Why Engineering Efficiency Should Win the Dev Productivity Debate

It's time to settle the McKinsey productivity debate.

McKinsey claims to be able to measure it, Gergely Orosz and Kent Beck think they got it wrong, and Martin Fowler says you can’t measure it, period. Yes, I’m talking about developer productivity.

The problem is that as this dev-productivity debate unfolds, we've already ceded important ground: the framing of the topic.

It’s not a surprise that we’re thinking about this the wrong way; the mythos of the hoodie-clad dev making magic late at night is powerful. But the truth is, engineering is a team sport once you’re past the early stages of a startup. Reframing the debate to focus on the efficiency of teams, instead of punishing individual developers for nebulous, perceived productivity data, is a necessary step.

So let's talk about engineering efficiency, and why it matters.

What McKinsey gets right, and what it gets wrong

Look, it’s great that companies like McKinsey care about making engineering more efficient, but they missed the forest for the trees, particularly by focusing on individual developers’ productivity. That said, they did get some things right, such as endorsing DORA metrics as the definitive baseline for DevOps practices. Giving teams visibility into their DORA metrics, as McKinsey recommends, is an approach that has been proven and tested in the real world.

Data: We saw major gains across our study when teams were given real-time access to their DORA metrics alongside other key workflow and efficiency metrics. These improvements came in the form of key leading and lagging indicators:

PR sizes improving (decreasing) by 33.2%

Cycle time improving by 47.27%

Review time improving by 46.82%

Pickup time improving by 48.75%
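For readers who want to track these indicators themselves, here is a minimal Python sketch of how cycle time can be split into phases from pull-request timestamps. It assumes a common four-phase breakdown (coding, pickup, review, deploy) and a hypothetical `PRTimeline` record; real tools may draw the phase boundaries differently. The `percent_improvement` helper assumes "improvement" means a relative reduction against a baseline period.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PRTimeline:
    """Hypothetical pull-request record; field names are illustrative only."""
    first_commit: datetime  # coding starts
    opened: datetime        # PR opened for review
    first_review: datetime  # first reviewer activity
    merged: datetime        # PR merged
    deployed: datetime      # change reaches production
    lines_changed: int      # additions + deletions (PR size)


def phases(pr: PRTimeline) -> dict[str, timedelta]:
    """Split one PR's cycle time into consecutive phases (assumed breakdown)."""
    return {
        "coding": pr.opened - pr.first_commit,
        "pickup": pr.first_review - pr.opened,
        "review": pr.merged - pr.first_review,
        "deploy": pr.deployed - pr.merged,
        "cycle": pr.deployed - pr.first_commit,  # equals the sum of the four phases
    }


def percent_improvement(before: float, after: float) -> float:
    """Relative reduction versus a baseline, e.g. 10h -> 5h is 50.0."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return (before - after) / before * 100


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0)
    pr = PRTimeline(t0, t0 + timedelta(hours=6), t0 + timedelta(hours=8),
                    t0 + timedelta(hours=11), t0 + timedelta(hours=12), 120)
    for name, duration in phases(pr).items():
        print(f"{name}: {duration}")
```

In practice you would aggregate these per-PR phases across a team (for example, as medians) before comparing periods, which keeps the focus on the workflow rather than on individuals.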

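McKinsey also shone a necessary spotlight on the symbiotic inner and outer loops of the development process: the inner loop of coding, building, and testing, and the outer loop of integration, deployment, security, and compliance work.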

Importantly, they also brought in the business-minded perspective of CEOs and CFOs, underscoring the need to measure and improve engineering effectiveness quantitatively.

I know some of you reading this will push back on that last point, and trust me, I have concerns about their methodology and approach too.

However, we need to accept the truth: we have to measure the success of our engineering teams. Sure, during the wild bull run of the last 15 years you didn’t always need to, but conditions have changed. CEOs and board members will not be willing to return to a world where they don’t have data-driven insights into how their engineering teams function.

This is why it’s so important that the conversation around the efficiency and productivity of engineering teams focus on the right things and take a team-centric approach.

This cannot be overstated: Individual developer metrics can wreak havoc on teams. Software development isn’t a solo race but a relay. Individual metrics often inadvertently foster competition over collaboration or encourage the wrong behaviors.

That’s not the only thing McKinsey and others miss:

They reduce senior developers' roles to coding. Senior devs are pillars of mentorship, guidance, and quality control.

McKinsey made a mistake by relegating security considerations to the periphery. In today's dynamic threat landscape, proactive “security-first” practices are non-negotiable.

How Kent Beck & Gergely Orosz improved McKinsey’s approach

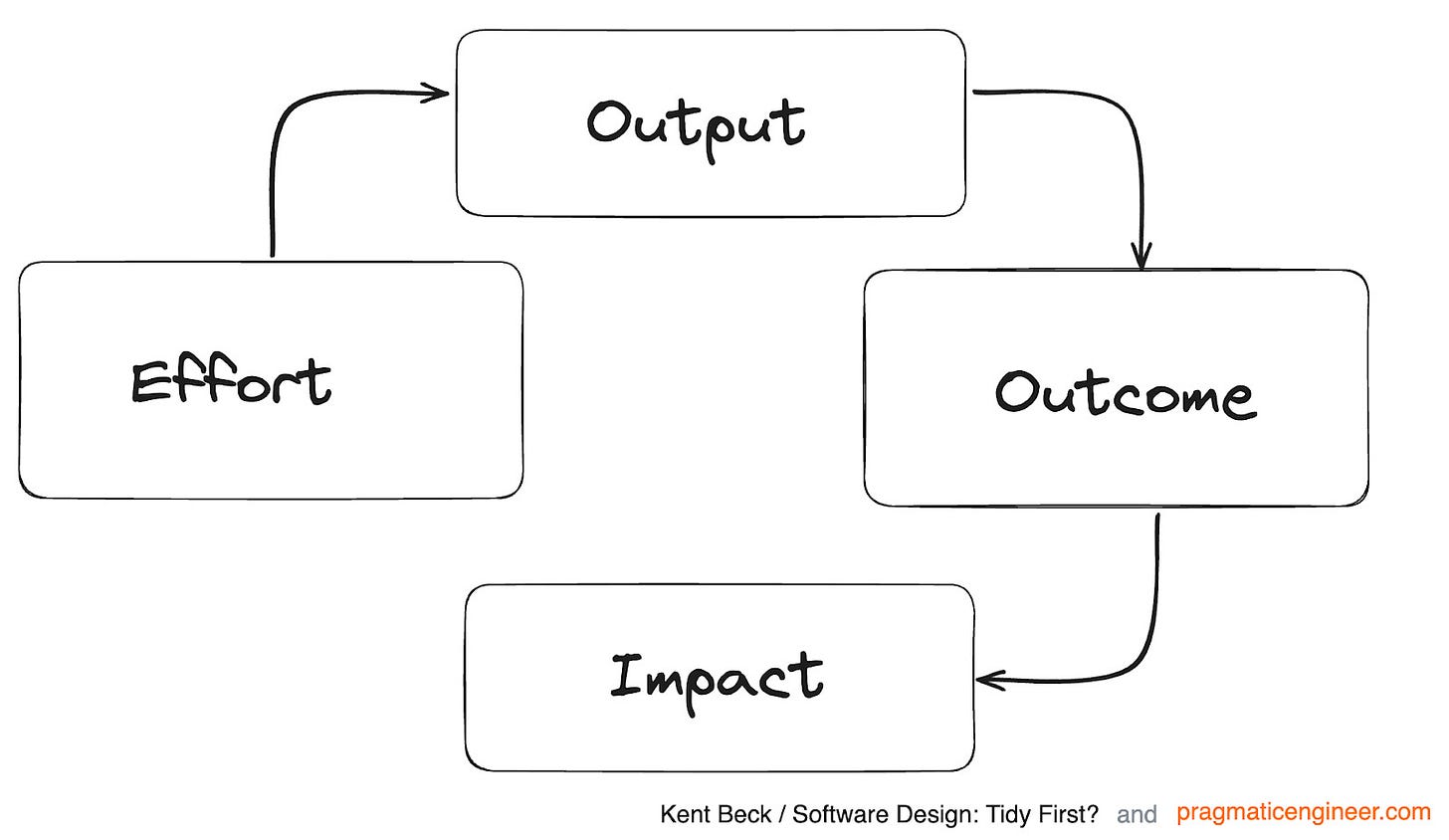

The collaborative two-part response by Beck and Orosz was a breath of fresh air, plugging many holes left by McKinsey. For example, leveraging the effort-output-outcome-impact framework provides a thoughtful and structured approach to metrics selection (the SPACE framework can help here, too).

Gergely and Kent also recognize the pivotal role of Developer Experience (DevEx) in fostering efficiency, since DevEx investments have shown proven ROI for engineering orgs. One such investment is streamlining the pull-request and code-review process so developers can get their code reviewed and merged more frequently.

Data: We studied engineering teams that had already adopted a DORA-based engineering metrics program and then applied gitStream automation and policy-as-code to streamline code reviews. Those teams saw compounding benefits.

In addition to the improvements from introducing DORA metrics (mentioned earlier), teams that improved DevEx workflows around code review saw:

PR sizes reduced by an additional 28.18%

Cycle times shortened by an additional 61.07%

PR review times improved by an additional 38.14%

Pickup times decreased by an additional 56.04%
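The gitStream policies behind these numbers are written in gitStream's own configuration format, which this sketch does not reproduce. Instead, here is a plain Python illustration of the policy-as-code idea: review routing decided by explicit, version-controlled rules. The `PRSummary` type, the size thresholds, and the action names are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical thresholds; each team would tune its own.
SMALL_PR_LINES = 50
LARGE_PR_LINES = 400
DOC_SUFFIXES = (".md", ".rst", ".txt")


@dataclass
class PRSummary:
    """Hypothetical PR summary; not gitStream's data model."""
    lines_changed: int
    files: list[str] = field(default_factory=list)


def review_policy(pr: PRSummary) -> list[str]:
    """Return the (hypothetical) actions a rule set would apply to a PR."""
    docs_only = bool(pr.files) and all(f.endswith(DOC_SUFFIXES) for f in pr.files)
    if docs_only:
        # Low-risk change: label it and keep the review lightweight.
        return ["label:docs-only", "request-1-reviewer"]
    if pr.lines_changed <= SMALL_PR_LINES:
        # Small PRs are quick to pick up; flag them so they don't sit waiting.
        return ["label:quick-review", "request-1-reviewer"]
    if pr.lines_changed >= LARGE_PR_LINES:
        # Large PRs slow review down; nudge the author and add a reviewer.
        return ["label:consider-splitting", "request-2-reviewers"]
    return ["request-1-reviewer"]


if __name__ == "__main__":
    print(review_policy(PRSummary(30, ["api/handlers.py"])))
    print(review_policy(PRSummary(900, ["README.md"])))
```

Because the rules live in code, they can be reviewed, versioned, and changed like any other part of the codebase, which is the core idea behind policy-as-code.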

However, there were some crucial areas that I wish Kent and Gergely had also addressed:

The positioning of DORA metrics, especially change failure rate (CFR). Metrics, while critical, need to be aligned with overarching business goals.

Avoiding effort measurements like code-review time entirely is a missed opportunity. While individual evaluations can be detrimental, collective effort analytics can uncover invaluable insights into team dynamics and bottlenecks.

The business imperative, from a CFO/CEO perspective, to link engineering milestones with business ROI, which is essential to fostering organizational alignment.
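To make the point about collective effort analytics concrete, here is a minimal Python sketch that aggregates code-review time per team while deliberately discarding reviewer identity. The record shape and the `min_samples` cutoff are assumptions for illustration; the cutoff avoids reporting on teams so small that a median would effectively describe one person.

```python
from collections import defaultdict
from statistics import median

# Each record: (team, reviewer, review_hours). This shape is hypothetical.
ReviewRecord = tuple[str, str, float]


def team_review_medians(records: list[ReviewRecord], min_samples: int = 5) -> dict[str, float]:
    """Median review time per team; reviewer names are intentionally dropped.

    Teams with fewer than `min_samples` reviews are omitted, since a median
    over a handful of reviews would effectively describe individuals.
    """
    by_team: dict[str, list[float]] = defaultdict(list)
    for team, _reviewer, hours in records:
        by_team[team].append(hours)
    return {
        team: median(hours)
        for team, hours in by_team.items()
        if len(hours) >= min_samples
    }
```

A median is used rather than a mean so that one unusually slow review does not dominate the team-level picture.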

What the data & devs are telling us: Efficiency is a better goal than “Productivity”

The term “developer productivity” often elicits images of a lone dev churning out line after line of code. However, in today's world, development is rarely an isolated task.

Shifting from the singular “Developer Productivity” to the more encompassing “Dev Team Efficiency” or “Engineering Efficiency” isn’t just semantics. It brings to the forefront the idea that developing software is a collective effort, much like “Sales Efficiency” emphasizes the integrated nature of sales processes.

By placing “efficiency” at the core of our discussions, we start valuing:

The quality of code

The effectiveness of collaboration

The smoothness of workflows

All of the above cumulatively contribute to improved outcomes. Conversely, metrics built around the misguided concept of lone developers working in silos can erode team dynamics and morale.

Efficiency elevates. Productivity divides.

Getting visibility into a dev team’s workflows and lifecycle allows the team itself to organically improve efficiency. These improvements come from teams finding, diagnosing, and fixing their own bottlenecks and bringing new ways to collaborate to light.

Our research has shown that, for most teams, the metrics that best align with efficiency and predictable project delivery fall into three key areas: 1) DORA, 2) Efficiency, and 3) Predictability.

What’s the path to Engineering Efficiency?

As we’ve seen over the past couple of months, the concept of developer productivity is divisive, and for good reason. While the two major sides of the debate have largely taken a top-down perspective on what developer metrics should be, we think the right approach is collaborative. By working alongside CTOs, engineering managers, and developers daily and digging into the data, you can build buy-in for the 360-degree view that efficiency is a win-win for everyone involved.

It’s not about one perfect set of metrics—it’s about understanding the context of individual teams and applying the right framework to give them the visibility and workflow improvements they need to improve.

Start with industry standards, like DORA metrics—and gather the quantitative and qualitative signals you need to pick the right path for your team.

How can you start driving Dev Efficiency? Get your DORA metrics for free 👇

With a free LinearB account, you’ll get all four DORA metrics right out of the box. No limitations on contributors, repos, or team size.

Here’s what’s included:

All four DORA metrics — Cycle Time, Deploy Frequency, CFR, MTTR

Industry-standard Benchmarks to help you define team performance and set data-backed goals

Additional leading metrics, including Merge Frequency and Pull Request Size (a great indicator of quality and efficiency)
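If you'd like to see what goes into these numbers, here is a minimal Python sketch that computes simplified versions of the four metrics from a list of deployment records. The `Deployment` fields are hypothetical, and several definitions are simplifying assumptions: cycle time runs from the start of a change to its deployment (DORA's own term is lead time for changes), a failure is any deployment flagged as causing a production problem, and time to restore is measured from the failing deployment until service recovered.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical deployment record; field names are illustrative only."""
    deployed_at: datetime
    change_started_at: datetime             # e.g. first commit of the shipped change
    failed: bool = False                    # caused a production failure
    restored_at: Optional[datetime] = None  # when service recovered, if it failed


def _hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def dora_metrics(deploys: list[Deployment], window_days: int) -> dict[str, Optional[float]]:
    """Compute simplified versions of the four DORA metrics over a time window."""
    if not deploys or window_days <= 0:
        raise ValueError("need deployments and a positive window")
    failures = [d for d in deploys if d.failed]
    restored = [d for d in failures if d.restored_at is not None]
    return {
        # Deployment frequency: deployments per day in the window.
        "deploys_per_day": len(deploys) / window_days,
        # Cycle time: median hours from start of change to production.
        "median_cycle_time_h": median([_hours(d.deployed_at - d.change_started_at) for d in deploys]),
        # Change failure rate: share of deployments that caused a failure.
        "change_failure_rate": len(failures) / len(deploys),
        # Mean time to restore: average hours from failing deploy to recovery.
        "mttr_h": (sum(_hours(d.restored_at - d.deployed_at) for d in restored) / len(restored))
        if restored else None,
    }
```

Returning `None` for time to restore when nothing failed avoids reporting a misleading zero.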
