What language should LLMs program in?

Should LLMs write code in strongly typed languages, or do they need something new entirely?

A guest post by Lenny Pruss of Amplify Partners.

We're standing at an inflection point in software development. For decades, programming languages have evolved to serve one primary audience: human developers. We've optimized for readability, expressiveness, and cognitive accessibility—all reasonable goals when flesh-and-blood engineers are the ones crafting every line of code. But as AI agents increasingly take over the task of writing software, we're forced to confront a fundamental question: what if the languages we've spent decades perfecting are entirely wrong for the new way we’ll write code?

The signs are already emerging. Python and JavaScript have dominated the coding landscape not because they produce the most robust software, but because they mirror how humans think about problems. Their weakly typed, forgiving nature makes them approachable for developers learning their craft. But when the "developer" is a large language model with no cognitive limitations (beyond a few well-formed examples they’re trained on), these human-friendly design decisions start to look less like features and more like bugs.

Weakly typed vs. strongly typed languages for LLMs

Consider how we currently approach AI code generation. We prompt a model in natural language—itself an inherently ambiguous medium—and ask it to produce code in languages that were designed to be flexible and interpretable by humans. The result is a double dose of ambiguity: imprecise inputs producing imprecise outputs. It's like asking someone to translate a poem from one language to another, then wondering why the nuance gets lost.

This matters more than you might think. While JavaScript's loose typing might help a junior developer get something working quickly, it creates exactly the kind of uncertainty that makes AI-generated code unpredictable. When a human writes if (user.id), they understand the implicit type coercion happening behind the scenes. When an AI writes the same code, that understanding exists only in the statistical patterns learned during training—a far less reliable foundation.

This is only exacerbated by the fact that JavaScript is far and away the most popular programming language in the world. It’s likely that the majority of the code that mainstream LLMs were trained on is in JS, pulled from the myriad of example repos and StackOverflow (🥲) questions pervading the web. It’s already well understood that LLMs training on old/multiple versions of popular libraries has a negative impact on code generation; it’s possible the same is true with respect to the language itself.

Meanwhile, functional languages like Haskell and Erlang offer a compelling alternative framework. Their emphasis on immutability, strong typing, and mathematical foundations provides exactly the kind of constraints that could guide AI systems toward more reliable outputs. Where JavaScript says "figure it out as you go," Haskell says "prove it works before you run it." For an AI system, this difference isn't just philosophical—it's practical (more on this later).

The concurrent and distributed programming models built into languages like Erlang also align well with how AI systems naturally operate. These languages were designed from the ground up to handle complexity through composition and isolation, principles that become even more valuable when the complexity is being managed by statistical models rather than human intuition. Though they might be harder to write for humans, they may be essential for us to enforce with agents.

Formal verification and mathematically proving code

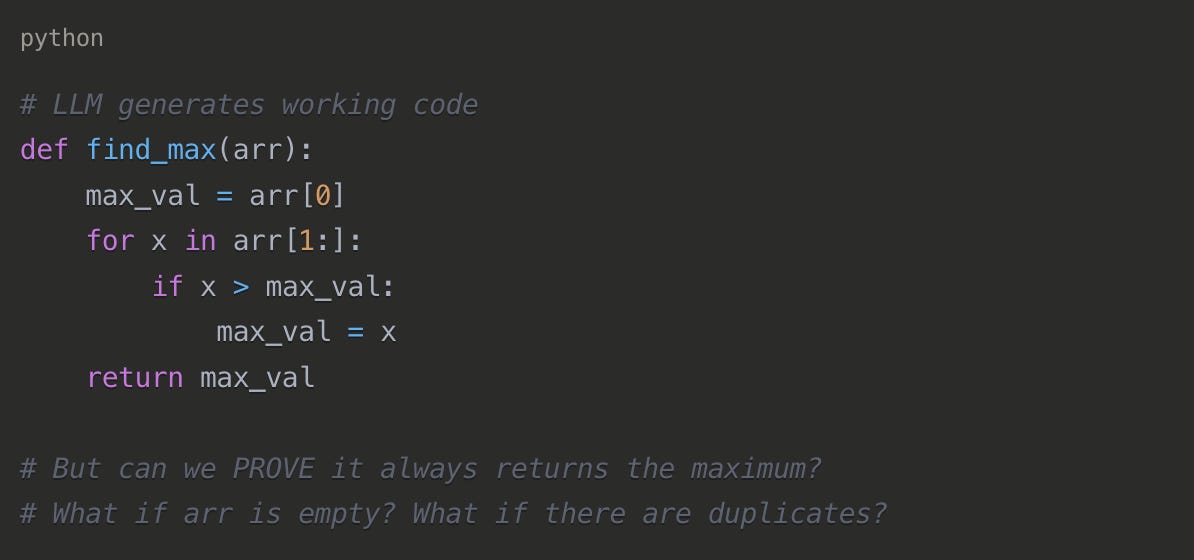

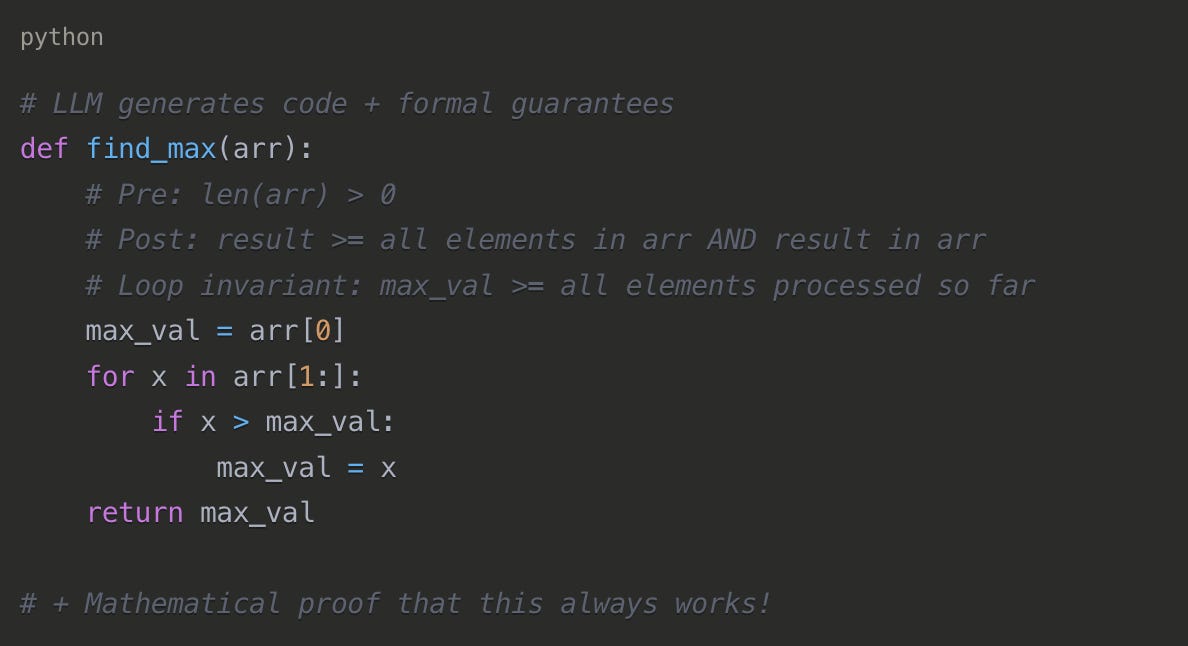

This brings us to an even more radical proposition: formal verification. While most engineers are familiar with unit testing—picking specific cases to validate expected behavior—formal verification takes a mathematical approach to proving correctness. Instead of checking that your code works for a handful of test cases, formal verification proves on a theoretical basis that it works for all possible cases.

The classic formal verification example involves something as simple as finding the maximum value in an array. A unit test might check a few different arrays and confirm the function returns the right answer. Formal verification would mathematically prove that the algorithm correctly identifies the maximum value for any valid input array, regardless of size or content.

This level of rigor has traditionally been reserved for mission-critical systems—aerospace, medical devices, financial infrastructure—where failure isn't just inconvenient but potentially catastrophic. The toolchain for formal verification reflects this niche status: languages like Dafny, SPARK, Rocq, and TLA+ remain largely research-oriented and in many ways impractical for everyday software development (though I hope this changes).

But as AI agents begin writing more of our code, I believe this discipline is going to become extremely relevant. The question isn't whether we can afford the overhead of formal verification—it's whether we can afford not to have it. When humans write code, we can rely on their understanding of context, intent, and edge cases. When AI writes code, we need stronger—in this case mathematical—guarantees.

The challenge lies in speed and practicality. Current formal verification approaches are too slow and cumbersome for the rapid iteration cycles that modern development demands. If we're going to ask AI agents to prove the correctness of every function they write, that process needs to happen in seconds, not minutes or hours.

Early research efforts are exploring how LLMs themselves might generate the theorems and proofs needed for verification, but these approaches are constrained by the scarcity of high-quality training data in formal methods. It's a chicken-and-egg problem: we need more formally verified code to train better verification models, but we need better verification models to make formal verification practical enough to produce that training data.

What if LLMs need an entirely new programming language?

Returning to the original question of this post, what if no existing languages are best for LLMs generating code? There might be a more fundamental opportunity: creating programming languages designed specifically for AI systems.

The current approach to AI code generation is remarkably inefficient when you think about it. We start with natural language (ambiguous), translate it into existing programming languages (still somewhat ambiguous), which then compile into machine code (finally unambiguous). That's at least three stages of compilation, each introducing potential errors and inefficiencies (remember the poem analogy from before).

What if we could collapse this pipeline? Instead of forcing AI systems to work within the constraints of human-designed languages, we could create languages that serve as more direct bridges between natural language intent and machine execution.

Work is already happening here. I met recently with a group out of MIT that used an LLM to create an entirely new programming language—one that had never existed before their project. The language is constrained and specialized, but after showing their AI system just a few examples, it could reliably generate code in this new syntax. The results were more predictable and verifiable than equivalent code generated in traditional languages.

This isn't just a theoretical exercise. The constraints built into such a language could enforce the kind of formal properties that make verification practical. By designing the language to eliminate entire categories of errors at the syntactic level, we could dramatically reduce the verification burden while improving the reliability of AI-generated code.

DSPy and the idea that LLMs are not actually black boxes

The implications extend beyond just the languages we use for final code generation. Projects like DSPy are exploring whether natural language is even the right interface for prompting AI systems in the first place. Instead of asking models to interpret free-form text instructions, DSPy provides structured programming interfaces that let us compose AI capabilities more reliably.

This represents a broader shift in thinking about AI systems. Rather than treating them as black boxes that we communicate with through natural language, we might be better served by developing more structured, programmatic interfaces that reduce ambiguity and increase predictability.

The parallel to traditional software development is striking. Early programmers worked directly with machine code, then assembly language, then increasingly abstract high-level languages. Each abstraction layer made programming more accessible to humans while maintaining or improving the reliability of the underlying systems. We may be approaching a similar transition point with AI, where the most effective interfaces aren't necessarily the most human-like.

Looking forward

The programming languages of tomorrow will likely look very different from those of today. They'll prioritize mathematical rigor over human readability, formal guarantees over flexible expressiveness, and compositional clarity over syntactic sugar. This isn't about making programming harder—it's about making it more reliable when the programmers are artificial intelligences with fundamentally different strengths and limitations than human developers.

The transition won't happen overnight, and it won't be universal. There will always be contexts where human-friendly languages remain the right choice. But as AI agents take on an increasingly central role in software development, the languages and tools we use will inevitably evolve to serve their unique capabilities and constraints.

Regardless of the presentation (a.k.a. syntax) the important thing is that programs should be described and generated in a controlled way. This is already done by the "Formal verification and mathematically proving" that is mentioned. You should not discard it only because of performance problems. If verification time is the problem, then your description of what you want is too ambitious. You should refine your requirement description to gain more generation/verification performance.