Nobody is shipping your agent’s code (yet) | Predictions from LinearB’s Ori Keren

The lobster in the room, a software survival guide, and why vibe coding is a slot machine.

AI has solved the blank-page problem for developers, but it has created a massive new bottleneck downstream in the SDLC. LinearB CEO Ori Keren joins us to explain why 2026 will be a year of norming as organizations struggle to digest the flood of AI-generated code. In this annual prediction episode, he details why upstream velocity gains are being lost to chaos in reviews and testing. We also discuss why enterprises aren’t ready to hand over the keys to autonomous agents and how to build dynamic pipelines based on risk.

1. OpenClaw is the lobster in the room

Whether you call it Moltbot or OpenClaw, it’s impossible not to talk about “the lobster in the room.” This new open-source project wires Claude Code directly into your local machine with unprecedented access and power. It gives the AI hooks into your everyday applications and turns it into a superpowered virtual assistant. While this marks a mainstream moment for agentic AI, neither Ben Lloyd Pearson nor I would ever run this thing on our actual laptops. (Be like everyone else and get a Mac Mini or a VPS!)

Read: Moltbot / OpenClaw Repository

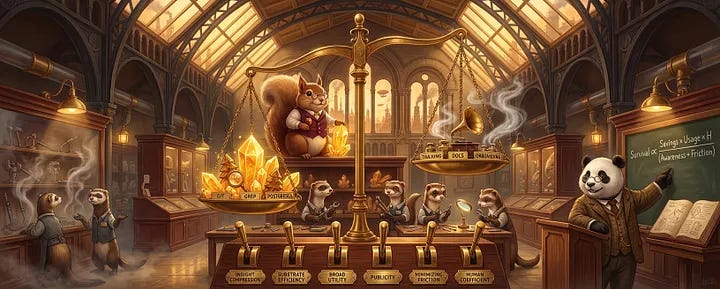

2. The new Survival Ratio for software

Steve Yegge is back with a framework for the AI era. He argues that software survival now depends on saving cognitive effort, measured in tokens, energy, or money. He introduces the “Survival Ratio” and suggests that tools like grep survive because of their “substrate efficiency”: running on a CPU, they punch above their weight class in a way a GPU never can. For SaaS founders, the warning is stark: the build-vs.-buy equation has changed. If a developer can build 80% of a niche SaaS product in a day, the only surviving moat is “insight compression,” meaning unique data or logic that an agent cannot easily replicate.

Read: Software Survival 3.0

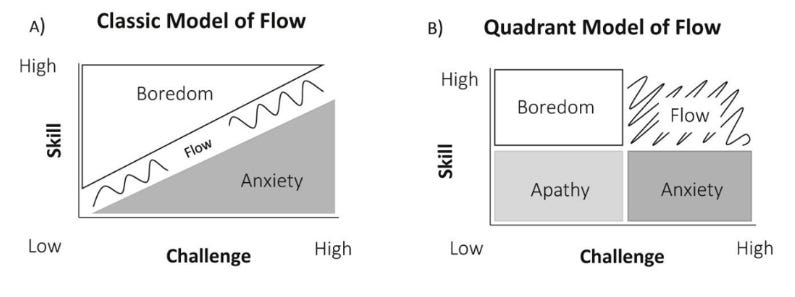

3. The dark flow dragging down AI productivity

Remember the METR study which found that developers believed AI tools made them 20% faster when they were actually 19% slower? The article below attributes this gap to “dark flow,” a gambling-like state in which the “celebratory noise” of generating massive amounts of code disguises hidden bugs and unmaintainable complexity. Meanwhile, we are seeing diminishing returns on raw model performance. The solution is to build the infrastructure and testing needed to turn that chaotic energy into actual velocity.

Read: Breaking the Spell of Vibe Coding

4. Anthropic publishes Claude’s constitution

Anthropic has published the “constitution” that governs Claude’s behavior and released it under a Creative Commons license. It outlines core values like helpfulness and safety, but with fascinating nuances. The guidelines seem to encourage the model to act as if a thoughtful senior Anthropic employee were looking over its shoulder. We view this as Anthropic hedging its bets for a future where models might genuinely understand these constraints, while also planting a flag regarding its ethical intent.

Read: Claude’s new constitution

5. AI drains the CUDA moat?

A Reddit user claims to have ported an NVIDIA CUDA backend to AMD’s ROCm in just 30 minutes using Claude Code. While such claims deserve a grain of salt given the complexity involved, the implication is profound nonetheless. If AI can act as a universal translator for kernels, the “moat” of proprietary ecosystems evaporates. This aligns with what Anush Elangovan told me about ROCm’s open-source architecture when I sat down with him on Dev Interrupted. In this world, open platforms gain a distinct advantage, as open source becomes the path of least resistance for agentic porting.

Watch: Speed is the moat with Anush Elangovan

6. Quantifying the podcast earworm

Speaking of past guests, Thiago Ghisi released a data deep dive into his 2025 listening habits. He ranked the episodes in his podcast feed by insights per minute (IPM), and Dev Interrupted made the list. It’s a fun example of applying agentic analysis to personal, unstructured data: he effectively turned a year’s worth of passive listening into a structured knowledge graph.

Read: My Top 25 Podcast Episodes & Interviews from 2025 by IPM (Insights Per Minute)