From Kubernetes to AI maximalism | Stacklok's Craig McLuckie

Feeding parrots, the "Glue Person" trap, and the dishwasher’s 100-year journey.

When you co-create Kubernetes, you earn the right to have strong opinions on the next platform shift. This week, Ben Lloyd Pearson sits down with Craig McLuckie, Co-founder & CEO of Stacklok, who is advocating for a shift in leadership mindset. He argues we need to move from asking if we can use AI to demanding to know why we can’t. Listen to hear why he believes an “AI maximalist” philosophy is the only way to survive the next cycle.

1. Stop vibe coding and start engineering

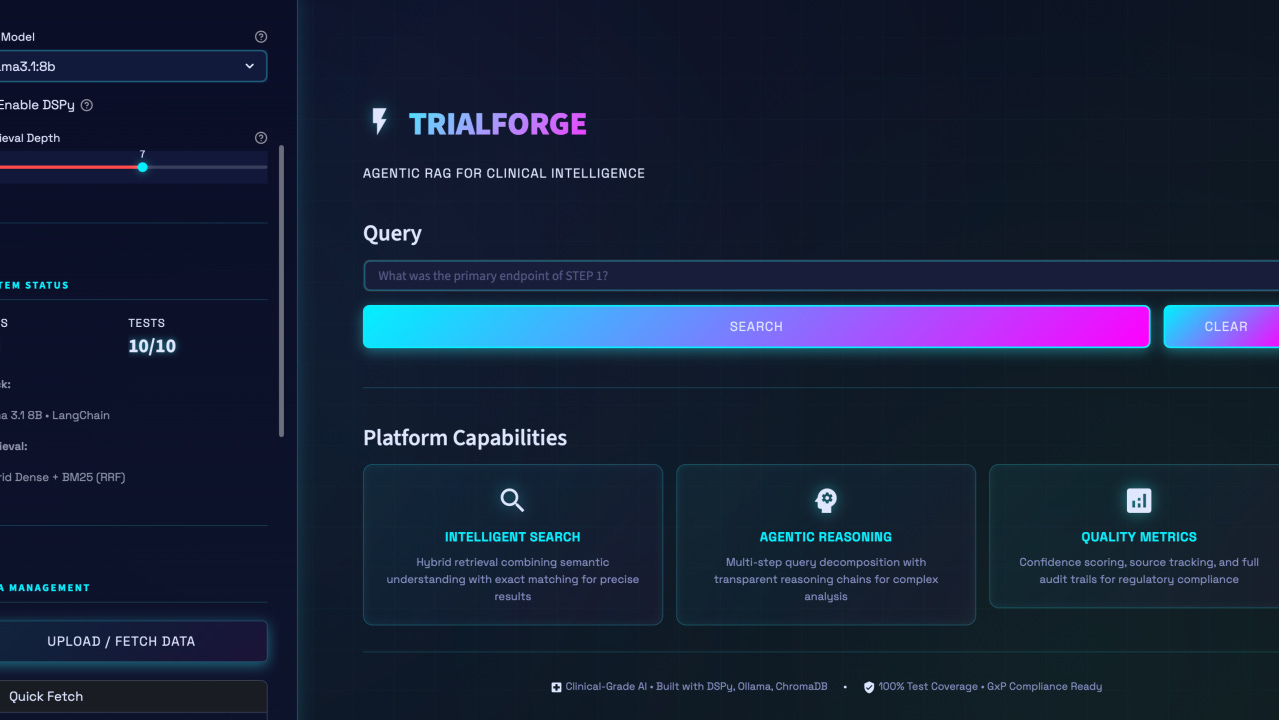

AI models are inherently probabilistic. They guess. That is a non-starter in high-stakes fields like healthcare or clinical research. Josh Phillips argues that we need to force LLMs to be reproducible and auditable using finance-grade rigor. This means moving beyond vibes to measurable quality metrics like groundedness and answer relevance. As Ben and Andrew noted on the podcast, if you can’t trace the logs and audit trails of your AI pipeline, you aren’t building software. You are just feeding a parrot and hoping it talks back.

Read: Making Probabilistic Systems Deterministic

2. AI is a steroid for the Dunning-Kruger effect

LLMs are confidence engines rather than knowledge engines. They will hallucinate a wrong answer with the same authority as a correct one. This is dangerous for junior engineers, but it is also a trap for leadership. As the 2025 DORA report suggests, AI acts as a mirror that amplifies both the good and bad habits already present in your org. The solution is to use LLMs Socratically. Don’t ask them for the answer. Ask them to challenge your answer. Force the model to act as a critic instead of an oracle.

Read: LLMs are steroids for your Dunning-Kruger

3. Replace the Glue Person with composable workflows

Every team has a glue person. This is usually a top performer who gets buried under the toil of coordination, weekly reports, and ticket hygiene. It is a fast track to burnout. The answer isn’t simply more automation; it is composable workflows. At Dev Interrupted, we’ve started viewing our role as AI wranglers. By defining every input and output so they plug into the next step, we can chain weekly rituals together. The goal isn’t just speed. It is reclaiming the focus of your best people.

Read: The Invisible Load: How AI Workflows Can Replace Your Team’s ‘Glue Person’

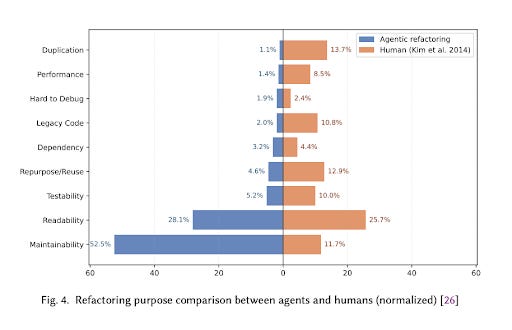

4. AI Agents: Great at cleanup, bad at architecture

New research analyzing over 15,000 refactorings across 1,600+ repositories reveals the current ceiling of AI coding agents. They are incredible at low-level cleanup and readability, achieving an 86.9% PR acceptance rate. However, they struggle significantly with high-level architectural changes. The data suggests a clear playbook for engineering leaders. Use agents for routine maintenance, but enforce separate PRs for agentic work. Don’t let an AI refactor ride along on a feature PR because it muddies the waters. Keep the architectural vision human-led and let the bots handle the broom.

Read: AI coding agents and refactoring research

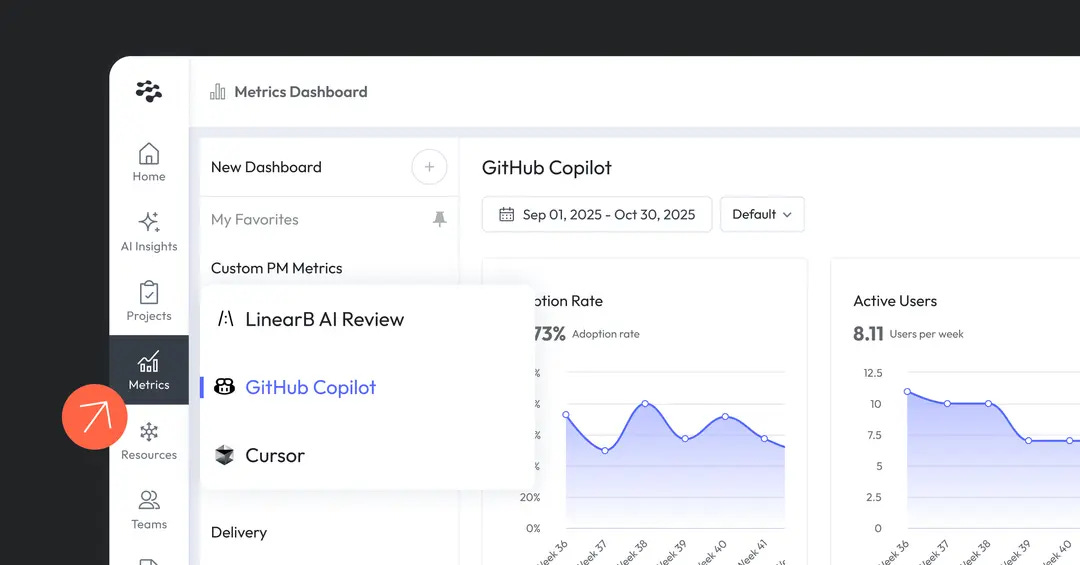

5. The missing link for Copilot & Cursor

Engineering leaders are doubling down on AI, but the big question remains: How do you actually measure the impact? With LinearB’s new Copilot and Cursor dashboards, you finally can.

Bring all your AI assistant metrics—adoption, acceptance rates, and engagement—into one view and connect them directly to delivery outcomes. Don’t just buy tools; understand how deeply they are integrated into your SDLC and where trust is growing. Turn your AI data into real engineering insights.

Read: Measuring the impact of Copilot and Cursor on engineering productivity

6. The “Genie” just speeds up the crash

Why does every software project eventually slow to a crawl? Kent Beck offers a sobering answer: every feature you ship burns optionality—the future flexibility of your code. When you code with a genie (AI), you aren’t escaping this dynamic; you are simply compressing the timeline. You hit the complexity wall faster. Beck argues that the only way to sustain velocity is to respect the rhythm of exhaling and inhaling. You must tidy (restore options) before you implement the next feature (burn options). If you don’t, the genie will just help you build an unmaintainable legacy codebase in record time.

Read: Why does development slow?

7. The 100-year adoption curve

Soap is ancient, but the dishwasher is surprisingly new. The first patent for a "table furniture" cleaning machine didn’t arrive until 1850, and it was a clumsy, hand-cranked tub that merely splashed water on dishes. It wasn’t until 1886 that Josephine Cochrane built the first commercially successful machine using pressurized water, yet it was adopted by hotels and showcased at the World’s Fair rather than installed in home kitchens. It took decades for infrastructure to catch up, a reminder that transformative tech often lingers in a B2B niche or “hand-cranked” phase long before it changes daily life for everyone else.

Read: Inventing the dishwasher