Faster coding isn't enough

AI's untapped power in software development

Coding assistants and inline suggestions have dominated the conversation around AI in software development. However, as engineering organizations mature their AI strategies, a more nuanced picture is emerging, one that reveals untapped opportunities and unexpected bottlenecks across the entire software development lifecycle (SDLC).

Dev Interrupted partnered with LinearB to survey more than 400 engineering leaders and developers. The research shows that while AI has reached significant adoption in coding workflows, other critical phases of the SDLC remain surprisingly underused.

To explore what this means for engineering leadership, I (Andrew Zigler) brought together three experts at the forefront of AI-driven development:

Suzie Prince, Head of Product for Compass at Atlassian

Birgitta Böckeler, Global Lead for AI Software Delivery at Thoughtworks

Adnan Ijaz, Director of Developer Agents and Experience at AWS, who leads development of Amazon Q Developer

Why AI acceleration isn’t a straight line

You can visualize the current state of AI adoption across the SDLC as a river flowing from project planning and requirements through coding, review, and release. In some areas, the river runs deep and fast; in others, it's surprisingly shallow, creating bottlenecks that can undermine the benefits of accelerated development elsewhere.

"The early days of AI-driven software development featured inline coding workflows. You're writing code and AI is helping you complete the code faster," explains Ijaz. "While it has been valuable, and that is where we won a lot of traction early on with the AI tools, coding is a relatively small part of everything a developer does in the entire software development lifecycle."

This observation points to a fundamental challenge: organizations have focused their AI investments on the most obvious lever, code generation, while leaving other critical workflow areas largely untouched. The result is a system under increasing pressure, where accelerated coding throughput meets traditional planning, review, and deployment bottlenecks.

The most significant bottleneck in AI-enhanced development workflows occurs during the code review and release approval phases. While AI can dramatically increase the volume of generated code, traditional review processes remain largely manual, creating new pressure points in the development pipeline.

These manual workflows create what might be called the "approval paradox." Organizations that successfully accelerate development through AI may be overwhelmed by the review and release management burden. The solution requires rethinking approval workflows, potentially incorporating AI-assisted review processes while maintaining appropriate human oversight for critical changes.
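To make the bottleneck concrete, here is a minimal sketch of a two-stage pipeline in which coding opens pull requests and human review closes them. The function name `review_backlog` and all of the rates are hypothetical, chosen for illustration rather than taken from the survey.

```python
def review_backlog(prs_opened_per_week: float, review_capacity_per_week: float, weeks: int) -> list[float]:
    """Return the number of PRs waiting for review at the end of each week.

    Coding opens PRs; human review closes at most `review_capacity_per_week` of them.
    Whatever review cannot absorb carries over to the next week.
    """
    backlog = 0.0
    history = []
    for _ in range(weeks):
        backlog += prs_opened_per_week                      # new PRs join the queue
        reviewed = min(backlog, review_capacity_per_week)   # review is the capped stage
        backlog -= reviewed
        history.append(backlog)
    return history


# Hypothetical team whose reviewers can handle 25 PRs per week.
print(review_backlog(20, 25, 4))  # before AI, 20 PRs/week: [0.0, 0.0, 0.0, 0.0]
print(review_backlog(40, 25, 4))  # AI doubles coding output: [15.0, 30.0, 45.0, 60.0]
```

Doubling coding output without adding review capacity does not double delivery: merged work stays capped at 25 PRs a week, and the excess piles up as an ever-growing review queue.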

Your engineering organization should balance the efficiency gains from automated approvals with the quality assurance from human review, particularly for security-sensitive or architecturally significant changes.
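One way to express that balance is a routing rule that sends security-sensitive or large changes to human review and lets smaller, low-risk changes go through an AI-assisted first pass. The sketch below is hypothetical: the path patterns, the 200-line threshold, and the `required_review` function are illustrative assumptions, not a feature of any particular review tool.

```python
from fnmatch import fnmatchcase

# Hypothetical patterns for security-sensitive or architecturally significant paths.
SENSITIVE_PATTERNS = ["auth/*", "*/auth/*", "*/crypto/*", "*.tf", "*/migrations/*"]
# Hypothetical size above which a change always gets human review.
MAX_AI_REVIEW_LINES = 200


def required_review(changed_files: list[str], lines_changed: int) -> str:
    """Return "human" or "ai-assisted" for a pull request (illustrative policy)."""
    touches_sensitive = any(
        fnmatchcase(path, pattern)
        for path in changed_files
        for pattern in SENSITIVE_PATTERNS
    )
    if touches_sensitive or lines_changed > MAX_AI_REVIEW_LINES:
        return "human"
    # Low-risk change: an AI reviewer does the first pass, a human still owns the merge.
    return "ai-assisted"


print(required_review(["docs/setup.md"], 12))       # ai-assisted
print(required_review(["src/auth/session.py"], 8))  # human
print(required_review(["src/app.py"], 450))         # human
```

Keeping the policy as explicit rules makes it easy to audit and to tighten or relax as teams build confidence in AI-assisted review.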

What the research reveals about SDLC AI adoption

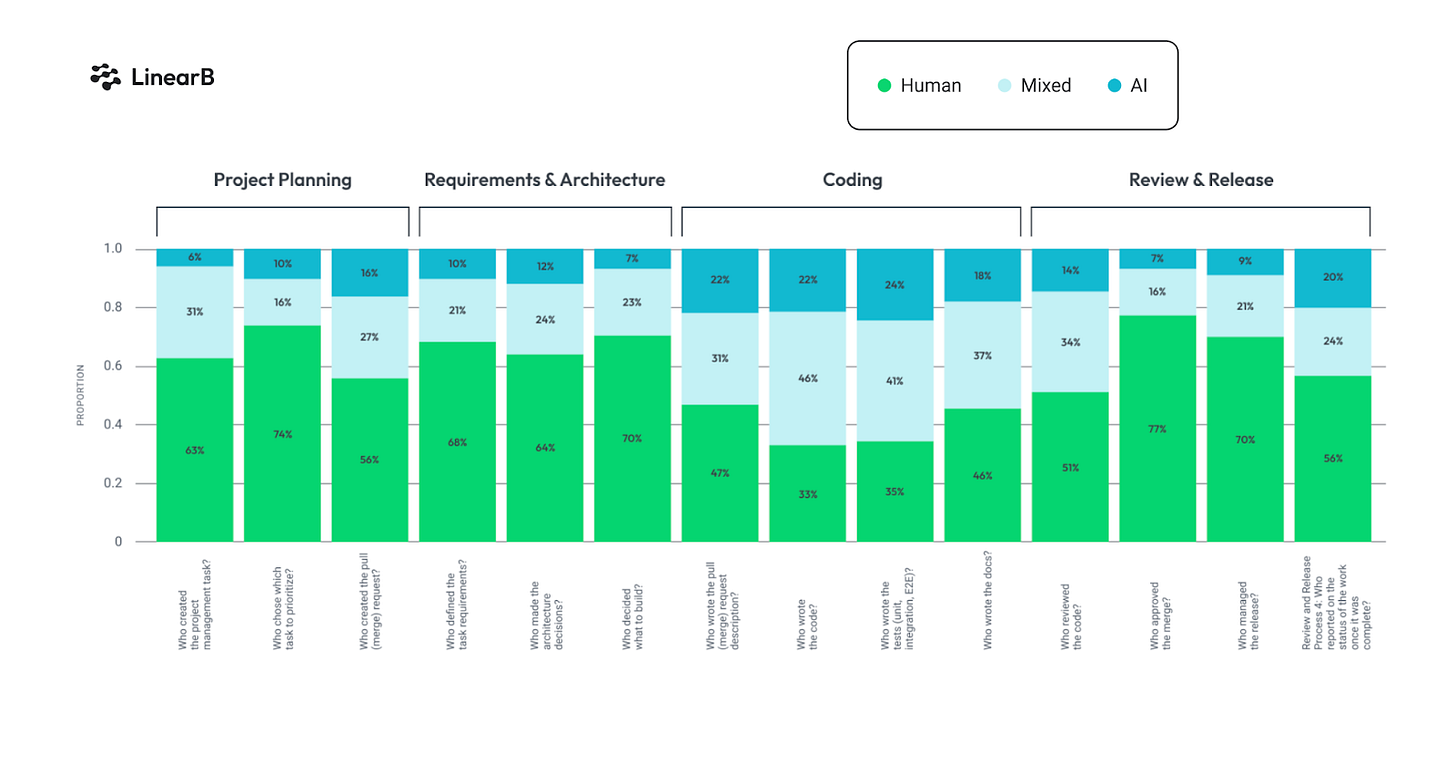

Dev Interrupted's research confirms this pattern. For the study, we broke the software delivery process into 14 steps across planning, requirements gathering, coding, and release. We asked participants to consider their most recent pull requests and, for each step of the process, to tell us who had completed the task: a human, AI, or both.

We asked the following questions:

Who created the project management task?

Who chose which task to prioritize?

Who created the pull (merge) request?

Who defined the task requirements?

Who made the architecture decisions?

Who decided what to build?

Who wrote the pull (merge) request description?

Who wrote the code?

Who wrote the tests (Unit, Integration, E2E)?

Who wrote the docs?

Who reviewed the code?

Who approved the merge?

Who managed the release?

Who reported on the status of the work once it was complete?

The research data reveals a stark picture of where AI adoption has succeeded and where significant gaps remain.

Coding phase shows strong AI integration

AI has achieved substantial penetration in core development activities. For writing code, only 33% of developers rely solely on humans, while 67% use AI either wholly or partially. Documentation and testing show similar patterns, with most developers using AI to support or handle these responsibilities. Even pull request descriptions have seen high AI adoption, with 53% of teams using AI assistance.

Requirements and architecture remain largely human-led

However, the upstream phases tell a different story. Task creation is still 68% human-led, architecture decisions remain 64% human-driven, and the critical question of "what to build" is still 70% human-determined. These numbers suggest that teams trust AI with implementation but hesitate to involve it in strategic decision-making.

Review and release present the biggest bottleneck

Perhaps most telling are the approval phases. Code review remains 51% human-only, while merge approval is 77% human-controlled. Release management is similarly constrained, with 70% of releases human-led. This reliance on human intervention creates exactly the bottleneck described earlier: AI can accelerate code creation, but traditional gatekeeping processes haven't evolved to handle the increased throughput.

Taken together, the data shows a clear pattern: teams have successfully adopted AI where the risk is low and the output is measurable (coding, documentation, testing), but remain cautious about areas requiring judgment, creativity, or strategic thinking. The question for engineering leaders is whether this caution is justified or whether it's leaving significant value on the table.

AI’s frontier beyond code completion

The reality of developer work extends far beyond writing code. Developers spend most of their time on activities outside of coding: planning, documentation, code reviews, and maintenance. This presents both an opportunity and a strategic imperative for engineering leaders.

“One of the stats I've seen is that 80% of coding time for a developer or the time that a developer spends is not coding. It's planning, it's documentation, it's reviews, it's maintenance. I think that's what you're seeing here, that there are opportunities… to transform different friction points in that lifecycle." —Suzie Prince

The shift toward agent-based AI represents a significant evolution from simple auto-completion tools. Rather than just helping developers write code faster, these systems can automate entire workflows: generating documentation, creating comprehensive unit tests, and even performing complex troubleshooting. Organizations like Deloitte have reported reducing unit testing time by 70% through agent interactions, while others have compressed documentation cycles from weeks to days.

This evolution requires engineering leaders to think systematically about where AI can significantly impact their specific workflows, rather than simply adopting the latest coding assistant.

Agentic tools also change how developers interact with AI day to day. They can perform complex, multi-step operations with minimal human intervention, including tasks in the developer's own environment.

"I asked it to look at all my AWS deployments and tell me how many S3 buckets I have... It came back and said, 'I can do that for you, but you don't have the AWS CLI installed. Do you want me to install it for you?'" —Adnan Ijaz

This level of autonomous operation extends beyond coding into areas like debugging, system administration, and even architectural analysis. However, it raises crucial questions about oversight, security, and the skills developers need to maintain as AI capabilities expand.

Balance AI innovation with engineering discipline

Implementing AI across the SDLC requires a cultural approach that embraces experimentation and healthy skepticism. The most successful organizations create environments where enthusiasts and skeptics can contribute to learning.

"The enthusiasts need to pull the skeptics up, and the skeptics need to pull the enthusiasts a little bit down to earth." —Birgitta Böckeler

This balanced approach is crucial because AI tools amplify existing processes indiscriminately. In organizations with strong development practices, AI can significantly enhance quality and velocity. In systems with underlying issues, however, it may just as easily accelerate the accumulation of technical debt or security vulnerabilities.

The sparring partner model, where AI serves as a thought partner rather than a replacement, has emerged as particularly effective. Developers use AI to explore different approaches, validate architectural decisions, and think through complex problems, while maintaining ownership and accountability for the outcomes.

One of the most significant challenges for engineering leaders is managing expectations around AI productivity gains. A focus on percentage improvements and speed metrics can create counterproductive pressure that leads to corner-cutting and quality degradation.

"Part of responsible leadership right now is to not put these high pressures on teams. Do you have to be faster now because you have AI? Because then they're gonna cut corners," warns Böckeler. "If you put that pressure on, then people are going to find ways to be faster by gaming the metrics or cutting corners."

This pressure can manifest in various ways: developers may accept AI suggestions without proper review, skip testing phases, or bypass security checks in pursuit of velocity metrics. The result is often a false economy where short-term gains in development speed are offset by increased debugging time, security incidents, or technical debt.

Engineering leaders must also address knowledge gaps among technical teams. Many developers still don't fully understand the implications of using AI tools, from data privacy concerns to the reality that most AI interactions involve sending code to third-party servers.

The playbook to safely scale AI-driven software development

Implementing AI responsibly requires clear organizational guidelines while maintaining the flexibility needed for experimentation. The key is creating frameworks that encourage innovation while maintaining appropriate safeguards around data handling, code quality, and security.

"AI is becoming foundational to how we work," observes Prince. "And the real opportunity is not replacing people. It's about amplifying individuals, amplifying creativity, and improving the flow through the system."

This amplification approach requires several key elements:

Transparency and ownership: Clear accountability for AI-generated outputs, with humans responsible for review and validation.

Tool selection criteria: Guidelines around which AI tools are approved for use, considering factors like data handling, security scanning, and license compliance.

Progressive experimentation: Start with low-risk use cases and gradually expand scope as teams build confidence and competency.

Continuous learning culture: Regular sharing of successes, failures, and best practices across the organization.

Want to benchmark your team's AI adoption? The DevEx Guide to AI-Driven Software Development is your next step. Building on the themes in this article, the guide introduces the AI Collaboration Matrix: a practical framework for mapping where AI is shaping your workflows, and where it's not. It breaks down common adoption pitfalls, highlights how companies like Meta and Google are operationalizing AI beyond Copilot, and offers strategic advice for every stage of the SDLC. If you're serious about moving from isolated tooling to AI-powered transformation, this guide is your blueprint.

Build an AI-ready organization

The conversation around AI in software development is shifting from tactical tool adoption to strategic workflow transformation. For engineering leaders, this transition requires several key considerations:

First, resist the temptation to optimize solely for speed. Organizations that see the most sustainable benefits from AI focus on overall system improvement rather than individual productivity metrics.

Second, invest in cultural change alongside technical adoption. The most successful AI implementations happen in environments that encourage thoughtful experimentation while maintaining high standards for quality and security.

Finally, think systematically about bottlenecks across the entire SDLC. The gains from AI-accelerated coding can be undermined by manual processes elsewhere in the pipeline, requiring a holistic approach to workflow optimization.

As AI capabilities evolve, organizations that can effectively integrate these tools while maintaining the human judgment, creativity, and accountability that define exceptional software development will gain a competitive advantage. The future isn't about replacing developers with AI; it's about amplifying human capability across every aspect of the software development lifecycle.

Watch the complete workshop hosted by Andrew Zigler here: