2024 is the year GenAI code hits adolescence

How to prepare software teams to handle the increased output of GenAI

Until now, generative AI in software development has been in its infancy. It has lacked autonomy, required constant supervision from developers, and could not make significant contributions without human oversight. In 2024, the use of generative AI in software development will reach adolescence: by the end of the year, GenAI is projected to be responsible for generating 20% of all code, or 1 in every 5 lines of code.

With 87% of surveyed software engineering leaders either likely to invest or already investing in GenAI coding tools for 2024, the rising number of training inputs will make GenAI code assistants increasingly effective. Like a child learning how to navigate the world, GenAI will learn from past data and adapt its behavior, with less need for immediate input and a deeper understanding of each company’s individual needs.

Even before this technological maturation, GenAI’s ability to increase the pace of code generation has led to a rush of new code hitting the software development pipeline — exacerbating existing bottlenecks within the rest of the software delivery process.

To prepare our teams to handle the increased output GenAI enables in code, test generation, and documentation, we have to answer three key questions:

Do we have the right talent and systems in place?

Are we set up to innovate with AI?

What’s the impact of GenAI code?

How will your team adapt to the increased velocity of code creation?

Most developers don’t like to review code; they like writing it. Unfortunately, this means increased AI-generated code will overload many organizations' software development pipelines. If we don’t have developers skilled in code review and comprehension, we could see a significant uptick in development bottlenecks.

The answer to this predicament lies in a combination of solutions. We must both upskill existing developers and prioritize code review as a frontline skill for the next generation of developers, all while improving code review automation and ensuring GenAI is trained for code quality.

Upskilling + Training:

To upskill current developers, we need to prioritize training as part of devs' everyday jobs. The technology landscape is changing faster than ever; we cannot view learning new skills as “going above and beyond.” Consider scheduling regular trainings for your engineering team that cover topics like code review best practices, how to spot common errors in AI-generated code, and new tools to assist in code review.

So many amazing coding schools worldwide have appeared in recent years, and universities have sought to increase the size of their computer science programs. As a result, we’ve seen a huge influx of new developers, with 48% of respondents to Stack Overflow’s 2023 developer survey having been coding for less than ten years. We must continue to make upskilling accessible to all while also focusing on code review and comprehension as a core competency. Doing so will ensure the next generation of developers is equipped for future development processes.

Code Review Automation:

Businesses and developers must invest in software pipeline automation on top of upskilling. The importance of code review can be reinforced via Policy-as-Code tools for the growing dev workforce. For example, we developed our free Continuous Merge tool gitStream to help businesses improve their software development lifecycle (SDLC). Using YAML files to hard-code their code review policies, gitStream users can standardize quality and efficiency best practices across their entire engineering organization. This automates unique merge practices that are critical to an organization’s success. There is also massive potential for AI-powered workflows within the code review process, which some major companies are already experimenting with.
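To make the policy-as-code idea concrete, here is a minimal Python sketch. It is not gitStream's YAML syntax: the `PullRequest` fields, rule names, label, and thresholds are all hypothetical and chosen only for illustration. The point is that a review policy can be written down as data and evaluated the same way for every pull request.

```python
from dataclasses import dataclass, field


@dataclass
class PullRequest:
    # Hypothetical, simplified view of a pull request.
    lines_changed: int
    files: list[str]
    labels: set[str] = field(default_factory=set)


# Policy expressed as data: (rule name, condition, reviewers required).
# Thresholds and the "ai-assisted" label are illustrative assumptions.
POLICY = [
    ("docs-only", lambda pr: bool(pr.files) and all(f.endswith(".md") for f in pr.files), 0),
    ("ai-assisted", lambda pr: "ai-assisted" in pr.labels, 2),
    ("large-change", lambda pr: pr.lines_changed > 400, 2),
]
DEFAULT_REVIEWERS = 1


def required_reviewers(pr: PullRequest) -> int:
    """Return the reviewer count from the first rule that matches the PR."""
    for _name, condition, reviewers in POLICY:
        if condition(pr):
            return reviewers
    return DEFAULT_REVIEWERS
```

Because rules are checked in order, the first match wins; a real policy would need to decide deliberately how overlapping rules (for example, a docs-only change that is also AI-assisted) should be resolved.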

GenAI Code Quality:

We must focus on training GenAI integrations for code quality to remove extraneous burdens placed on developers. Leaders can accomplish this by prioritizing, as training data, code that strictly observes their organization's own quality standards, and by creating room for the review and annotation of training data early on. These steps take extra time in the early stages of AI model training but pay dividends later. With these foundations laid, engineering teams can expect higher quality AI-generated code, reducing the amount of review and remediation required from developers.

Will AI cause developers to lose the ability to innovate?

Yes, IF we don’t put guardrails in place now to proactively maintain innovation within our organizations.

GenAI relies on training data to inform its outputs; it’s not currently designed to produce new, innovative code. On the human side, innovation will decrease if we over-rely on GenAI to generate code. If developers aren’t creating code with their own hands, we’ll miss out on discovering new needs and ways of doing things. Accordingly, training data will remain stagnant. It’s a cyclical issue.

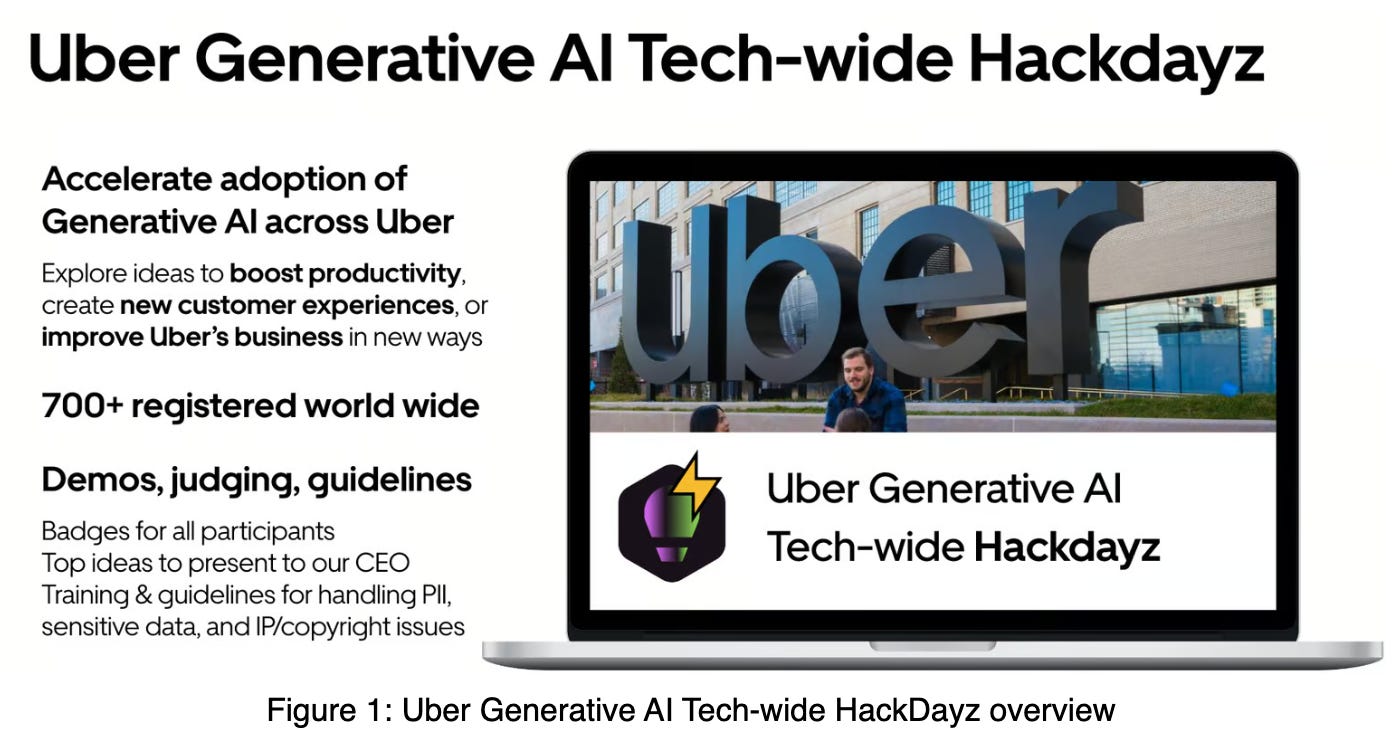

There is a risk that devs will lose their innovation ability unless we actively foster cultures of experimentation and exploration. For example, Uber is driving innovation in its organization by hosting “Uber Tech-wide Hackdayz” where teams delve into the world of GenAI to uncover endless opportunities (98 new project demos, to be exact) for the business.

AI-generated code can’t be the end-all-be-all of development work—and velocity can’t be our sole focus. We must maintain a hybrid approach to code creation by using GenAI to augment development work, not replace it. When your engineering team views AI through this lens, they’ll use it for repetitive, mundane work such as writing tests and documentation, which gives devs time back for complex, high-leverage tasks.

Lastly, encourage knowledge sharing across your engineering team. Enable developers to learn from each other and drive innovation within the organization. You can’t learn from AI when it’s simply learning from the training data you gave it — your people are your biggest assets when it comes to pushing boundaries in the SDLC.

How do we track the impact of AI-generated code?

You won’t truly be able to tell if your dev team is delivering unless you’re tracking metrics around the success of your AI code, alongside gathering qualitative survey data from your engineering team. Without specifically tracking AI-generated code, we won’t be able to accurately measure its effects, both negative and positive. It’s impressive that we can improve the speed of code creation with AI, but it doesn’t help us much if we can’t understand where to press the pedal and where to ease off. We need to understand the effectiveness of AI code and how it correlates to improved software delivery before we can make it truly impactful.

We can assess code effectiveness using several quantitative metrics, including GenAI adoption (PRs opened, PRs merged), the benefits you’re getting from AI-generated code (merge frequency, coding time), and the risks behind GenAI code (PR size, rework rate, review depth, PRs merged without review, review time). These metrics all point back to the importance of a well-rounded engineering metrics program as part of understanding how to improve our teams.
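As a rough sketch of how a few of these metrics could be computed, the Python below summarizes a batch of pull requests. The `PR` record and its field names are hypothetical, and the definitions used here (for example, review depth as mean comments per reviewed PR) are assumptions for illustration rather than the definitions used in any particular metrics product.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass
class PR:
    # Hypothetical record; field names are assumptions for illustration.
    ai_assisted: bool
    merged: bool
    lines_changed: int
    review_comments: int
    reviewed: bool


def summarize_ai_prs(prs: list[PR]) -> dict[str, float]:
    """Summarize adoption and risk indicators for AI-assisted PRs."""
    ai = [p for p in prs if p.ai_assisted]
    merged_ai = [p for p in ai if p.merged]
    reviewed_ai = [p for p in ai if p.reviewed]
    return {
        # Adoption: AI-assisted PRs opened and merged.
        "ai_prs_opened": len(ai),
        "ai_prs_merged": len(merged_ai),
        # Risk: typical size of an AI-assisted PR, in lines changed.
        "median_pr_size": median(p.lines_changed for p in ai) if ai else 0,
        # Risk: review depth, assumed here to mean comments per reviewed PR.
        "review_depth": mean(p.review_comments for p in reviewed_ai) if reviewed_ai else 0,
        # Risk: AI-assisted PRs that were merged with no review at all.
        "merged_without_review": sum(1 for p in merged_ai if not p.reviewed),
    }
```

Running the same summary over non-AI PRs gives a baseline to compare against, which is what makes the numbers interpretable.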

The Accelerate State of DevOps Report 2023 provided qualitative data indicating improved dev happiness as AI automates friction points in the SDLC. Unfortunately, it’s currently unclear whether those using AI in the development process saw improved software delivery quality or speed. We haven’t tracked metrics around AI-generated code for long enough to fully understand its impact (yet).

However, we can now close this telemetry gap by labeling PRs that contain AI-created or AI-assisted code and following them through the pipeline. This helps engineering teams identify where code is getting stuck and determine how to unblock AI-generated code so that it is delivered faster. Leaders can drive engineering efficiency across the team by integrating metrics that track AI-generated code into their engineering metrics programs.
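A minimal sketch of what label-based tracking makes possible, assuming each PR carries a list of labels and a record of hours spent per pipeline stage. The label name `ai-assisted`, the stage names, and the dictionary shape are all hypothetical. Once PRs are labeled, the labeled and unlabeled groups can be compared stage by stage to see where AI-generated code gets stuck.

```python
from collections import defaultdict
from statistics import median

AI_LABEL = "ai-assisted"  # hypothetical label name; use whatever your team agrees on


def median_stage_hours(prs: list[dict]) -> dict[str, dict[str, float]]:
    """Group PRs by the AI label and take the median hours spent in each stage."""
    samples = defaultdict(lambda: defaultdict(list))
    for pr in prs:
        group = "ai" if AI_LABEL in pr["labels"] else "other"
        for stage, hours in pr["stage_hours"].items():
            samples[group][stage].append(hours)
    return {
        group: {stage: median(values) for stage, values in stages.items()}
        for group, stages in samples.items()
    }


def slowest_stage(stage_medians: dict[str, float]) -> str:
    """Return the stage with the largest median duration."""
    return max(stage_medians, key=stage_medians.get)
```

For example, if `slowest_stage` returns a review-related stage for the `ai` group but a coding stage for the `other` group, that suggests the time saved writing code is being spent waiting on reviewers.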

With this new telemetry + survey signal (like our new GenAI Impact Report), we can get a more complete picture that:

Helps us make better decisions on how to adjust systems/processes

Highlights further automation opportunities - coderabbit.ai is an interesting example

Justifies the tool investment to the org

The ability to track the ROI of GenAI code with hard data is currently in beta at LinearB - and we’re releasing it to the world on January 25th, along with our report detailing the metrics and data that show the impact of GenAI on the software development lifecycle.

If you want access to the data and the ability to track the ROI of your GenAI code initiative, you can join us and ThoughtWorks’ Global Lead for AI Software Delivery for a free workshop Thursday, January 25th, or on January 30th, where we’ll share:

Data insights from LinearB’s forthcoming GenAI Impact Report

Case studies into how others are already doing it

Impact Measures: adoption, benefits & risk metrics

A live demo: How you can measure the impact of your GenAI initiative

End result: a free-flowing development pipeline

Every organization wants high-quality, predictable delivery. And it’s been proven that faster code reviews lead to better software delivery. Google’s DORA research indicates that teams with faster code reviews perform 50% better on software delivery.

Yet, code reviews are a huge friction point in the SDLC, especially when organizations are overrun with AI-generated code. To make AI-generated code truly beneficial to organizations, we must equip reviewers with the tools to create a rapid flow of code through the software delivery pipeline while ensuring that time saved on code generation is put towards high-leverage activities.

To truly improve code review and accommodate the influx of AI-generated code, we must prepare reviewers with upfront context around the code they’re reviewing, implement code review automation tools to reduce manual tasks, and regularly measure and revise code review processes through team-based metrics.

Only when we fully understand the impact of GenAI in software development and equip devs with the right toolset and processes to work alongside GenAI will these tools fully mature into adulthood as essential and valuable contributors to software delivery.